|

I am a final-year Computer Science PhD candidate at Stony Brook University, interested in research on vision-language modeling. My PhD thesis focuses on using vision-language representation learning and multimodal foundation models (e.g., multimodal LLMs) to model human visual attention (eye gaze). I am advised by Minh Hoai Nguyen, Dimitris Samaras, and Gregory Zelinsky, and I also collaborate with Niranjan Balasubramanian, Lester Loschky, Sidney D'Mello, and Sanjay Rebello.

Previously, I was an NLP Engineer at Samsung Research Institute, Bangalore, where I worked on the Natural Language Understanding team. Before that, I was an undergraduate student in the Department of Computer Science & Engineering at Jadavpur University, Kolkata, working on action detection and recognition in videos.

I have served as a reviewer for CVPR, ICCV, ICLR, NeurIPS, and TPAMI.

Résumé / Email / Google Scholar / LinkedIn


I am broadly interested in Computer Vision, Natural Language Processing, and Multimodal AI (Vision-Language Modeling). My PhD research focuses on using vision-language representation learning and multimodal foundation models (e.g., multimodal LLMs) to model human visual attention (eye gaze). For more details, refer to my résumé.

|

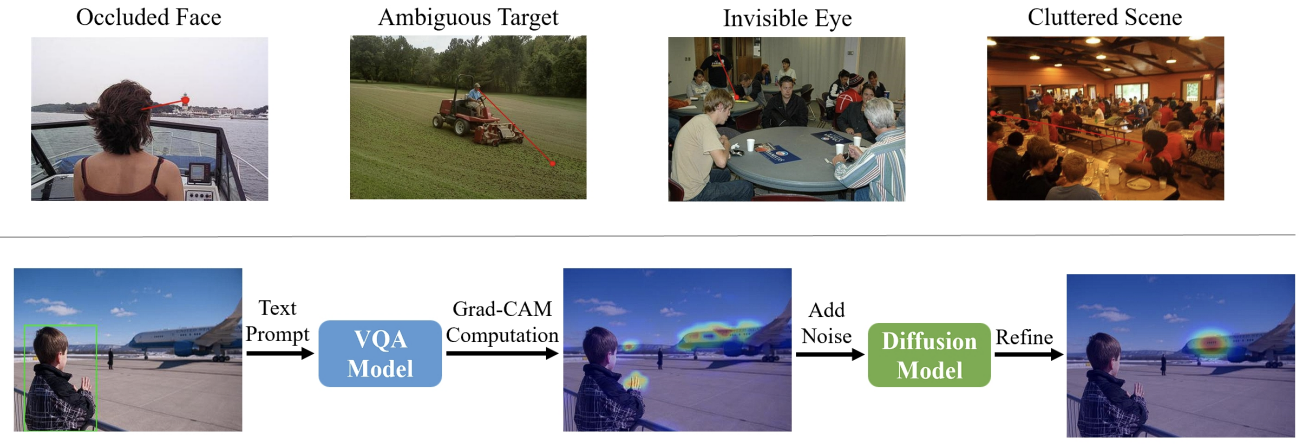

Sounak Mondal, Naveen Sendhilnathan, Ting Zhang, Yue Liu, Michael Proulx, Michael Iuzzolino, Chuan Qin, Tanya Jonker. ICCV, 2025. Paper

|

Ruoyu Xue, Jingyi Xu, Sounak Mondal, Hieu Le, Gregory Zelinsky, Minh Hoai, Dimitris Samaras. CVPR, 2025. Paper

|

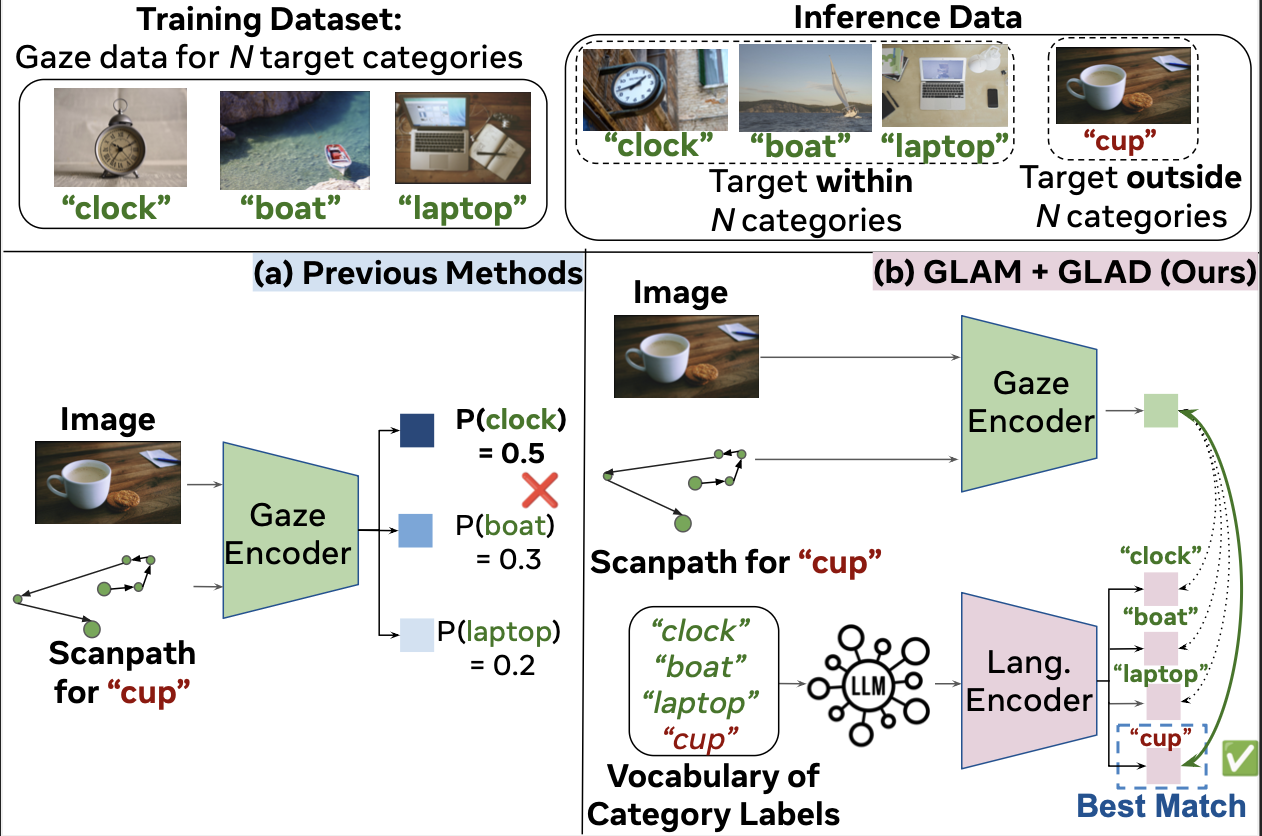

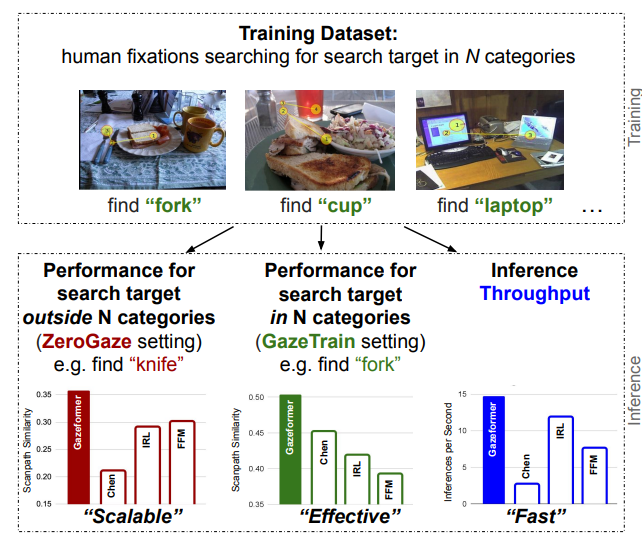

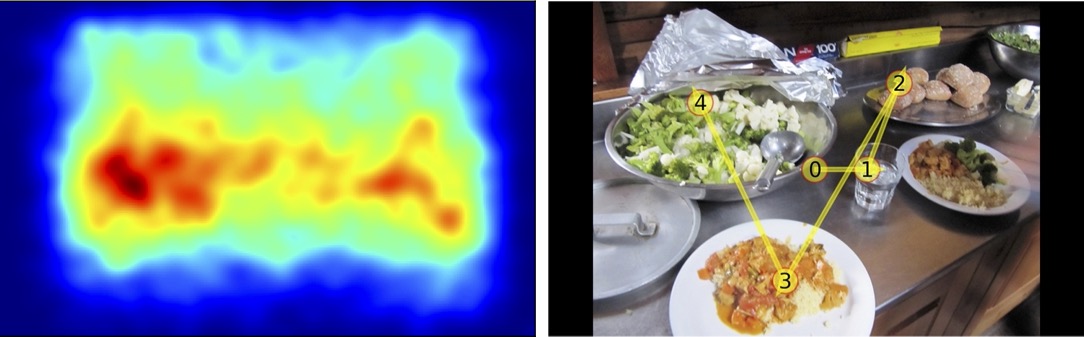

Sounak Mondal, Seoyoung Ahn, Zhibo Yang, Niranjan Balasubramanian, Dimitris Samaras, Gregory Zelinsky, Minh Hoai. ECCV, 2024. arXiv / Project Page / Code / Dataset / Talk

|

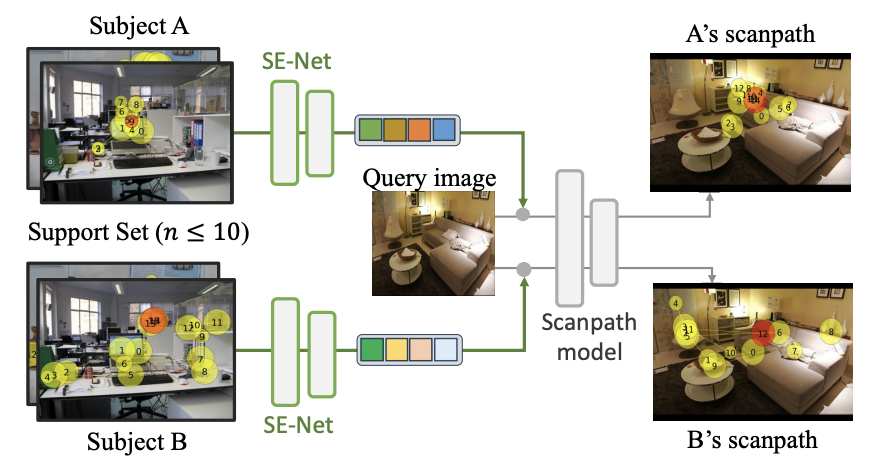

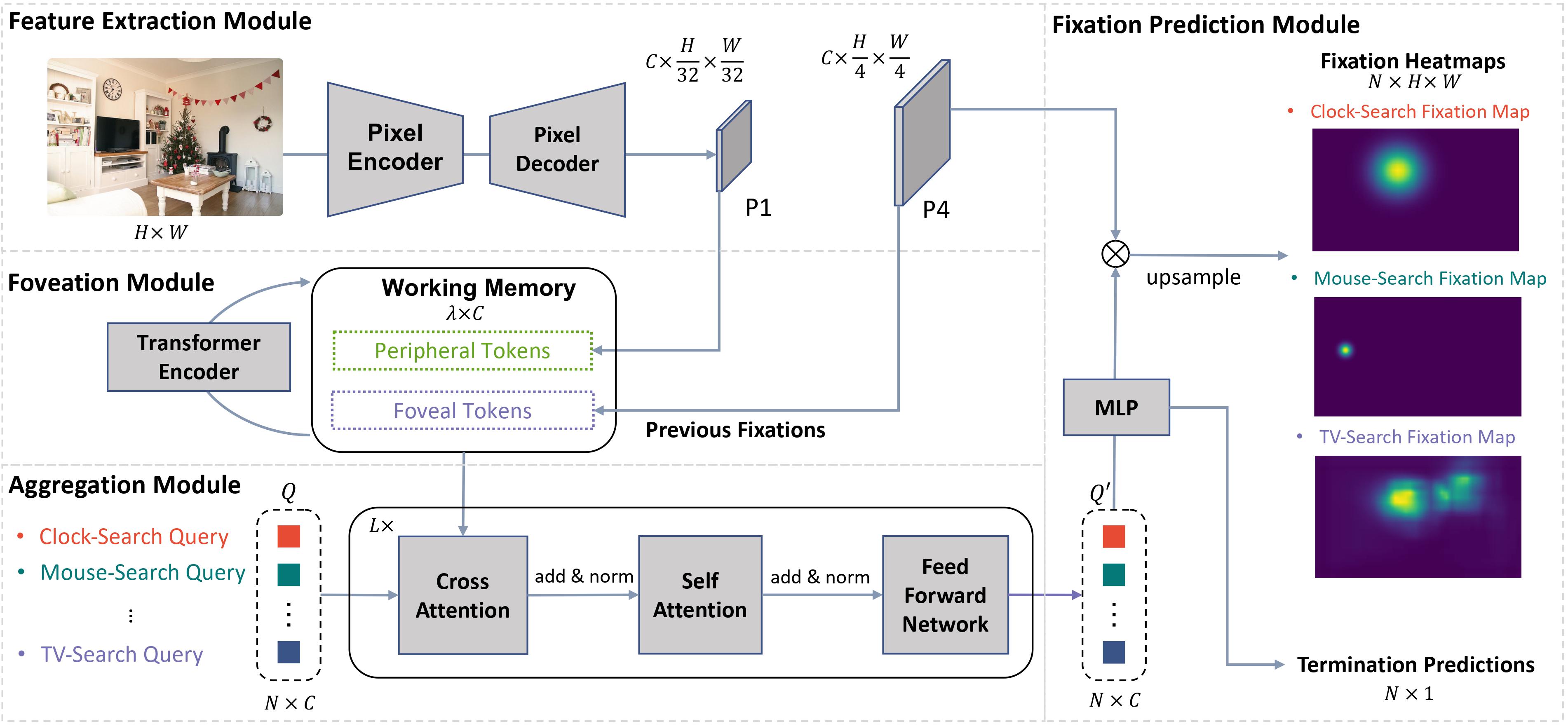

Qiaomu Miao, Alexandros Graikos, Jingwei Zhang, Sounak Mondal, Minh Hoai, Dimitris Samaras. ECCV, 2024. arXiv

|

Zhibo Yang, Sounak Mondal, Seoyoung Ahn, Ruoyu Xue, Gregory Zelinsky, Minh Hoai, Dimitris Samaras. CVPR, 2024. arXiv

|

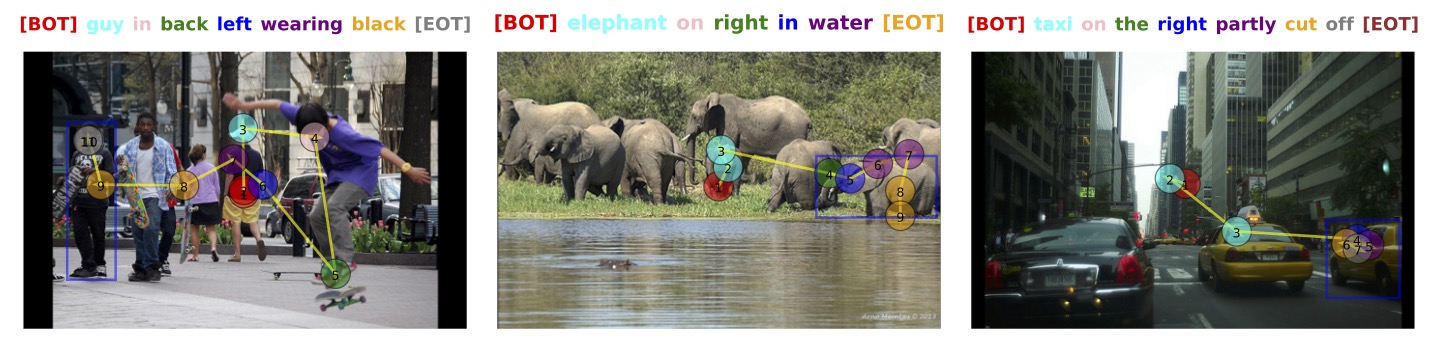

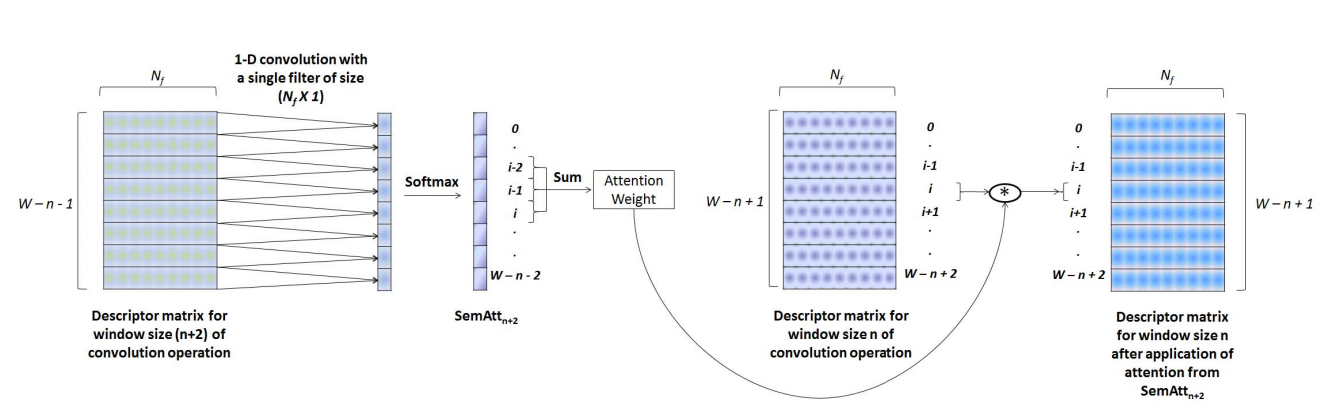

Sounak Mondal, Zhibo Yang, Seoyoung Ahn, Dimitris Samaras, Gregory Zelinsky, Minh Hoai. CVPR, 2023. arXiv / Supplement / Code / Talk

|

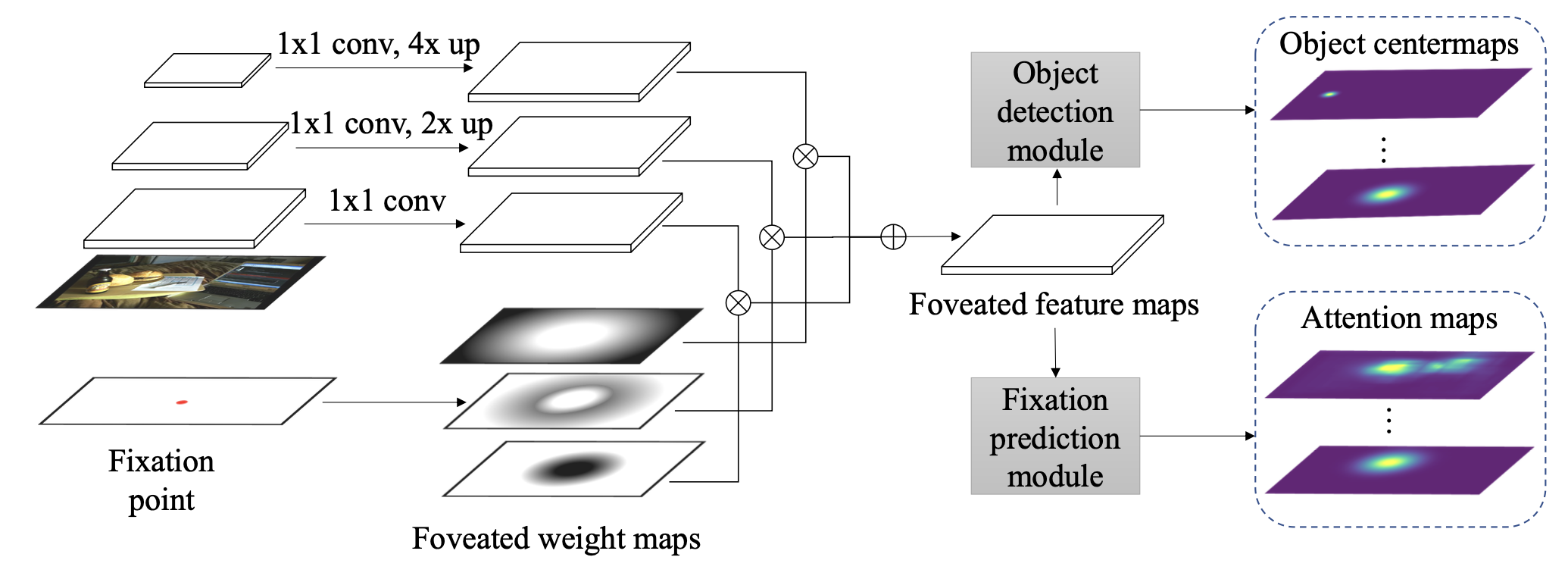

Zhibo Yang, Sounak Mondal, Seoyoung Ahn, Gregory Zelinsky, Minh Hoai, Dimitris Samaras. ECCV, 2022. arXiv / Supplement / Code

|

Yupei Chen, Zhibo Yang, Souradeep Chakraborty, Sounak Mondal, Seoyoung Ahn, Dimitris Samaras, Minh Hoai, Gregory Zelinsky. CVPR Workshop, 2022. Paper / Supplement

|

Sounak Mondal, Suraj Modi*, Sakshi Garg*, Dhruva Das, Siddhartha Mukherjee (* indicates equal contribution). ICSC, 2020. Paper

|

Sounak Mondal, Soumyajit Pal, Sanjoy Kumar Saha, Bhabatosh Chanda. ICAPR, 2017. Paper

|

Soumyajit Pal, Sounak Mondal, Sanjoy Kumar Saha, Bhabatosh Chanda. ICVGIP Workshop, 2016. Paper
